You’re driving home from work when your phone buzzes—but it’s not a text, not a calendar reminder. It’s an alert: “Possible atrial fibrillation detected. Consider contacting your doctor.” Your heart skips a beat in more ways than one.

A decade ago, getting this alert would’ve required a trip to a cardiologist and possibly weeks of monitoring. A century ago, you wouldn’t even know there was a problem until it was too late. Today, your remote cardiac monitoring device can serve as your first line of defense. Thanks to advancements in detection, providers are catching more arrhythmias earlier, sometimes before symptoms appear.

So, how exactly did we get from heavy analog machines that required hospital stays to discreet sensors and cloud-based AI that stream data in near real time? The answer spans more than a century of innovation: a timeline shaped by physics, code, databases, and, increasingly, software that can learn.

In this blog post, we’ll explore how each era pushed the boundaries of what arrhythmia detection could be, and what its future holds for both clinicians and patients.

From Galvanometers to GPUs: A Century-Long March Toward AI Arrhythmia Detection

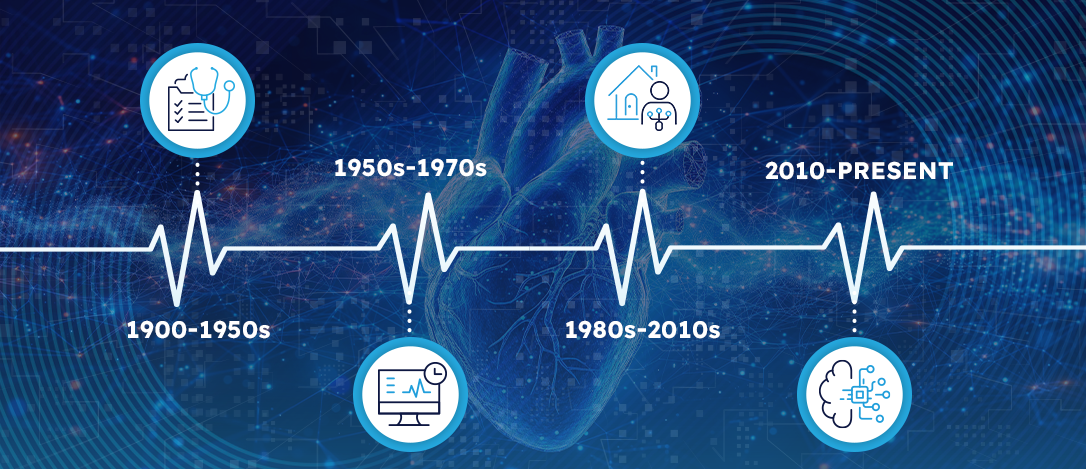

Over the past century, arrhythmia detection has evolved from bulky analog machines to sleek, AI-powered wearables. What began as basic signal capture has grown into near real-time, predictive monitoring—transforming how we understand and manage heart rhythm disorders.

Let’s take a look at the major milestones that brought us from early galvanometers to the cutting-edge tools of today.

1900-1950s: Analog Foundations

The journey of arrhythmia detection began with a spark of scientific ingenuity in the early 20th century.

In 1903, Dutch physiologist Willem Einthoven introduced the string galvanometer, a towering apparatus capable of capturing the heart’s electrical activity. With it came the first standardized electrocardiogram (ECG) “leads,” which set the path for reading cardiac rhythms and helped earn Einthoven the 1924 Nobel Prize in Physiology or Medicine. Physicians could now reliably visualize the invisible: the heartbeat’s electrical language.

By the 1930s and 1940s, bedside monitoring had made its way into surgical settings, as vacuum-tube oscilloscopes enabled real-time rhythm observation during operations. Then, in 1949, physicist Norman “Jeff” Holter, alongside cardiologist Paul Dudley White, unveiled an 85-pound backpack ECG recorder, enabling ambulatory monitoring for the first time.

Although rudimentary, this marked the birth of portable rhythm surveillance, a foundational shift that would pave the way for future breakthroughs.

1950-1970s: The Digital Spark

The developments of the analog days gave way to a digital awakening. In 1959, the NIH’s Medical Systems Development Lab began digitizing ECG signals. Led by innovators like César Caceres, the team published some of the first rhythm-analysis algorithms in FORTRAN, translating heartbeats into code.

Just a few years later, in 1961, Holter’s original concept was commercialized. His 85-pound prototype shrank into a 1-pound cassette-based Holter monitor, capable of continuous 24-hour ECG recording. For the first time, clinicians could capture the full range of a patient’s daily rhythms, which was essential for spotting transient arrhythmias that would otherwise go undetected.

By the mid-1960s, computer-assisted ECG carts were bringing automated measurements to hospitals. The culmination came in the 1970s, when teams at MIT and Massachusetts General Hospital developed bedside monitors and algorithms that could automatically flag ectopic beats and pauses, offering proof of concept that software could triage cardiac events faster than clinicians.

As the groundwork for digital ECG interpretation solidified, the focus began to shift toward continuous, real-world monitoring and automated intervention—ushering in an era where detection wasn’t just diagnostic, but lifesaving.

1980-2010s: Always-On Monitoring

The closing decades of the 20th century brought a new paradigm: devices that not only recorded arrhythmias but also responded to them in real-world settings, outside hospital walls.

In 1980, a team led by Dr. Michel Mirowski performed the first human implantation of an implantable cardioverter-defibrillator (ICD), a device that could detect life-threatening ventricular arrhythmias and treat them by automatically delivering shocks when needed.

In parallel, researchers and engineers were building the back-end infrastructure that would fuel algorithmic progress in arrhythmia detection. The MIT-BIH Arrhythmia Database, released in 1980, became the first open dataset for algorithm testing, a crucial milestone for standardization and benchmarking. It was followed in the mid-1980s by the Common Standards for Quantitative Electrocardiography (CSE) Multilead Project, which compared the output of commercial ECG software against cardiologist consensus and exposed wide variability between vendors, underscoring the need for better logic.

During the 1990s, academic neural network (NN) studies began applying back-propagation and radial basis function networks to MIT-BIH beats, inching past the accuracy of expert systems. Though brittle and sensitive to noise, these machine learning (ML) models outperformed rule-based systems on complex arrhythmias.

In 1998, Medtronic’s Reveal ILR emerged: a subcutaneous implantable loop recorder that could store ECGs for months, and in later generations years, rather than mere days. By capturing rare episodes of syncope or atrial fibrillation, it transformed the diagnosis of sporadic events.

2010-Present: Near Real-Time & Cloud-Compatible

The 2010s saw smartphones become essential hubs for ECG viewing and data transfer, linking patient-captured signals to clinicians in near real time. The decade also saw rhythm monitoring move into everyday consumer devices.

In 2018, the Apple Watch Series 4 became the first consumer device to receive De Novo FDA clearance for ECG recording, putting atrial fibrillation (AFib) detection on millions of wrists and marking a turning point in how and where arrhythmias are identified. But while consumer-facing devices grabbed headlines, AI was rapidly evolving behind the scenes.

Just a year earlier, in 2017, Stanford researchers unveiled a 34-layer deep neural network that detected arrhythmias from single-lead ECGs. A version trained on more than 90,000 ECGs, published in 2019, exceeded the average cardiologist’s performance across 12 rhythm classes, helping cement deep learning’s role in ECG interpretation.

By 2023, these capabilities had left the lab and entered clinical practice. InfoBionic.Ai’s MoMe ARC® platform received FDA 510(k) clearance, delivering multi-lead Bluetooth ECG and effectively turning remote patient monitoring into virtual telemetry.

Looking ahead, literature reviews forecast another leap: hybrid convolutional neural network (CNN)/transformer models that analyze multi-sensor data streams, from ECG to photoplethysmography (PPG) to motion and beyond. These tools aim to deliver not just detection but also context-aware, real-time alerts that can anticipate arrhythmia episodes before they begin. In the 21st century, near real-time monitoring is no longer just reactive. It’s verging on predictive and becoming profoundly personalized.

The Clinical Impact: Why This Evolution Matters

The century-long march hasn’t just transformed patient outcomes; it has reshaped clinical workflows in ways that are both measurable and meaningful.

- Earlier AFib Detection = Lower Stroke Risk. AFib increases the risk of stroke about fivefold, yet it’s often silent and intermittent, making it notoriously difficult to catch early. The widespread use of continuous monitors and on-demand consumer ECG tools has enabled earlier detection, allowing timely initiation of anticoagulation therapy. According to the CDC, proper AFib management could prevent thousands of strokes each year, potentially saving lives and reducing long-term disability.

- Faster, More Confident Diagnoses. Traditionally, diagnosing an arrhythmia relied on patients experiencing symptoms during a brief ECG window. Today’s extended-wear patches, implantables, and cloud-streamed sensors mean that clinicians have access to days, weeks, or even years of rhythm data. This shortens the time to diagnosis, especially for intermittent or asymptomatic cases, and often eliminates the need for repeat testing.

- Better Patient Adherence Through Comfort and Simplicity. Patients are more likely to wear, and trust, devices that fit seamlessly into their daily lives. Modern wearables are smaller, water-resistant, and wire-free, improving comfort and increasing adherence to monitoring protocols. This leads to more complete datasets, fewer gaps in care, and higher diagnostic yields, particularly in outpatient settings.

- Analysis That Lightens the Load. Clinicians have long been, and still are, overwhelmed by the sheer volume of monitoring data. Better automated analysis can cut review time, reduce false positives, and help ensure that serious arrhythmias aren’t missed, freeing clinicians to focus on care rather than on sorting through data.

Turning Progress into Better Patient Outcomes

As arrhythmia detection has evolved from paper tracings to AI-powered platforms, the impact on clinical care has been profound. But this evolution is about more than technological progress; it’s about making a measurable difference in people’s lives.

When it comes to arrhythmia detection, platforms like the MoMe ARC® are helping turn a century of progress into everyday practice—bringing hospital-grade monitoring to the point of need, without adding complexity.

Learn how InfoBionic.Ai is helping providers and patients stay connected to what matters most today—every heartbeat.